December 5th at 3:24pm

AI Theremin

The Theremin is an iconic electronic musical instrument known for its hands-free, gesture-based control of pitch and volume. Despite its historical significance and unique charm in music composition, the traditional Theremin is limited in tonal diversity, stylistic adaptability, and integration with modern technology. Rapid advances in generative AI, such as LLMs (Large Language Models) and music generation models, open a new opportunity to extend the Theremin's functionality and its role in contemporary music creation.

This project introduces an AI-powered Theremin that combines generative AI with gesture-based performance to create a new kind of interactive music experience. It keeps the instrument's traditional playing characteristics while adding AI capabilities that address its limitations in tonal expression, stylistic versatility, and real-time interactivity.

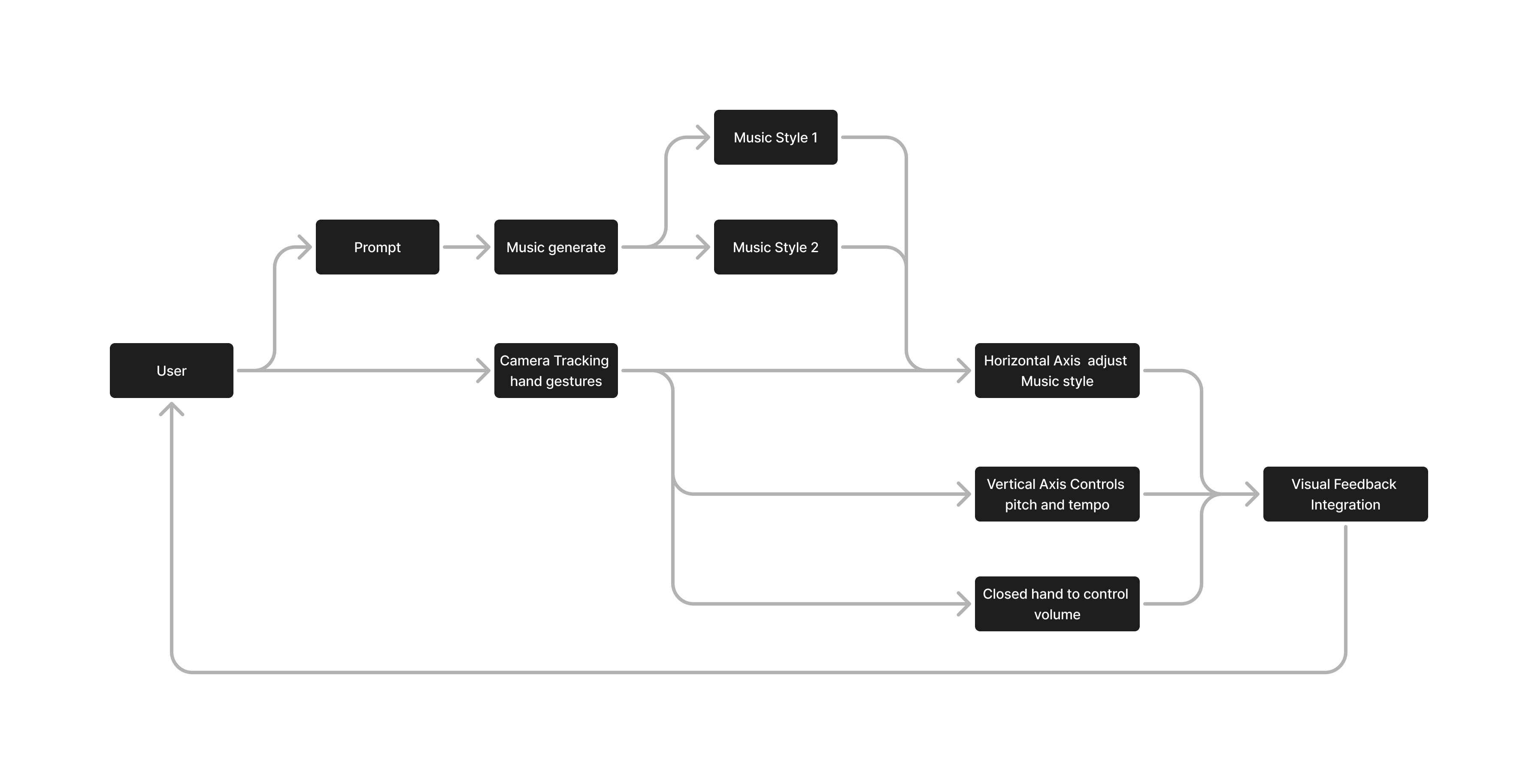

1. Music Prompt Input and AI Generation

Users define the music’s theme in natural language, which makes the creative process personal and engaging. From a prompt (e.g., “melancholic forest theme”), the system generates two stylistically distinct tracks with synchronized pitches.

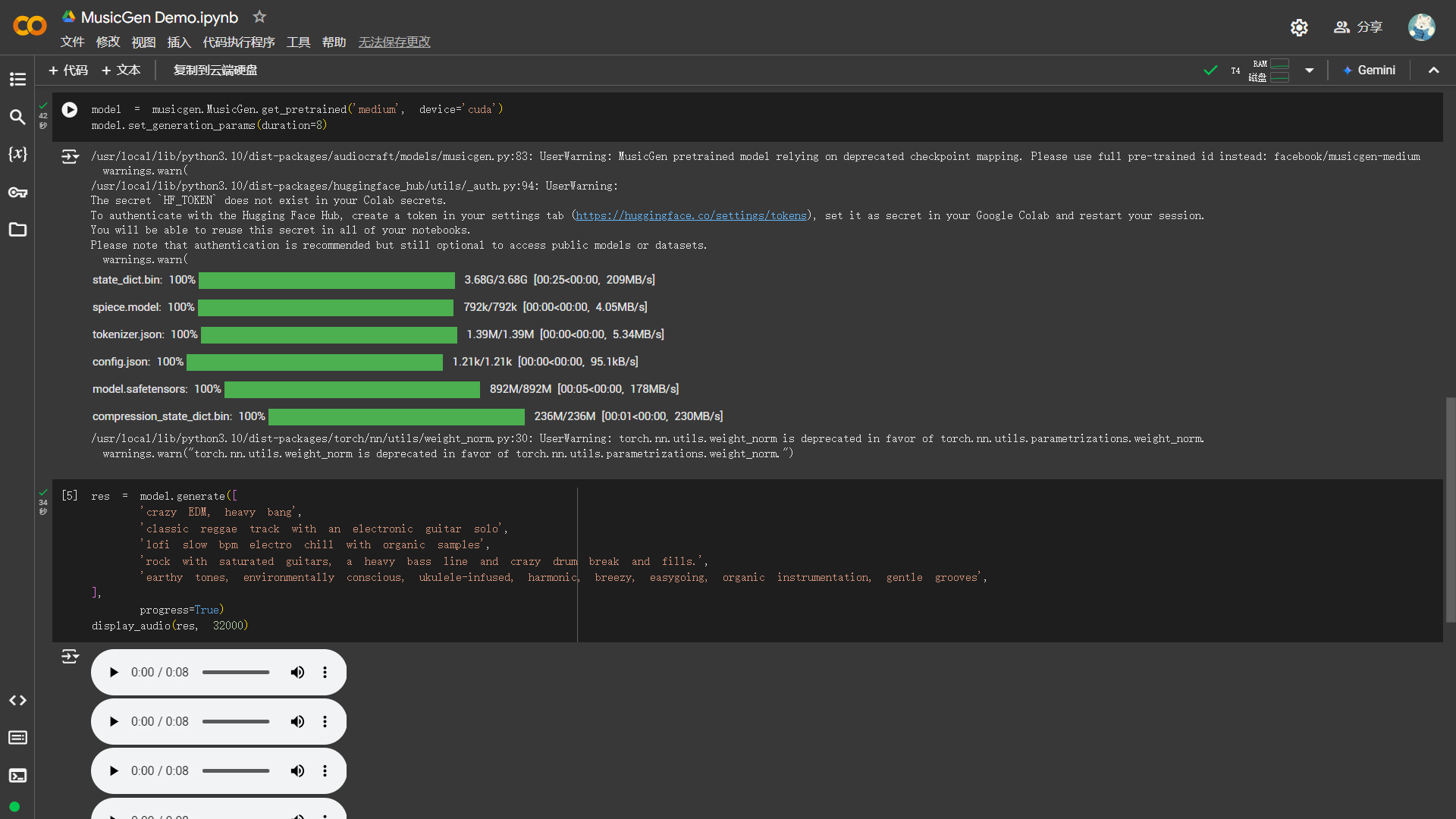

Test Prompt: Folk, A rustic countryside with a musician strumming a simple acoustic guitar, surrounded by rolling green hills and a peaceful sunset, capturing the essence of tradition and storytelling, traditional folk.

AI-Functionality: Uses generative AI models (an LLM and Music Gen) to create high-quality monophonic music.
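The write-up does not show how the LLM and Music Gen are chained, so the following is only a hypothetical sketch of the prompt-expansion step: a plain template function stands in for the LLM and turns one user theme into two style-specific prompts. Pinning both prompts to the same key and tempo is an assumed way to keep the two tracks' pitches aligned; `build_track_prompts`, `SharedConstraints`, and the default key and BPM are illustrative names and values, not part of the project.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class SharedConstraints:
    """Musical settings shared by both tracks (hypothetical defaults)."""
    key: str = "A minor"
    bpm: int = 90


def build_track_prompts(theme: str, styles: Tuple[str, str],
                        shared: SharedConstraints = SharedConstraints()) -> List[str]:
    """Expand one user theme into two style-specific prompts for a music model.

    Both prompts carry the same key and tempo (an assumption) so the generated
    tracks can stay pitch-aligned when the performer switches between them.
    """
    if len(styles) != 2:
        raise ValueError("expected exactly two styles")
    theme = theme.strip().rstrip(".")  # tidy trailing punctuation from user input
    return [
        f"{style}, {theme}, monophonic melody, key of {shared.key}, {shared.bpm} BPM"
        for style in styles
    ]


prompts = build_track_prompts("melancholic forest theme", ("orchestral", "acoustic guitar"))
for p in prompts:
    print(p)
# orchestral, melancholic forest theme, monophonic melody, key of A minor, 90 BPM
# acoustic guitar, melancholic forest theme, monophonic melody, key of A minor, 90 BPM
```

In a real pipeline each string would be handed to the music generation model; an LLM could replace the template to produce richer descriptions like the test prompt above.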

2. Gesture-to-Music Mapping

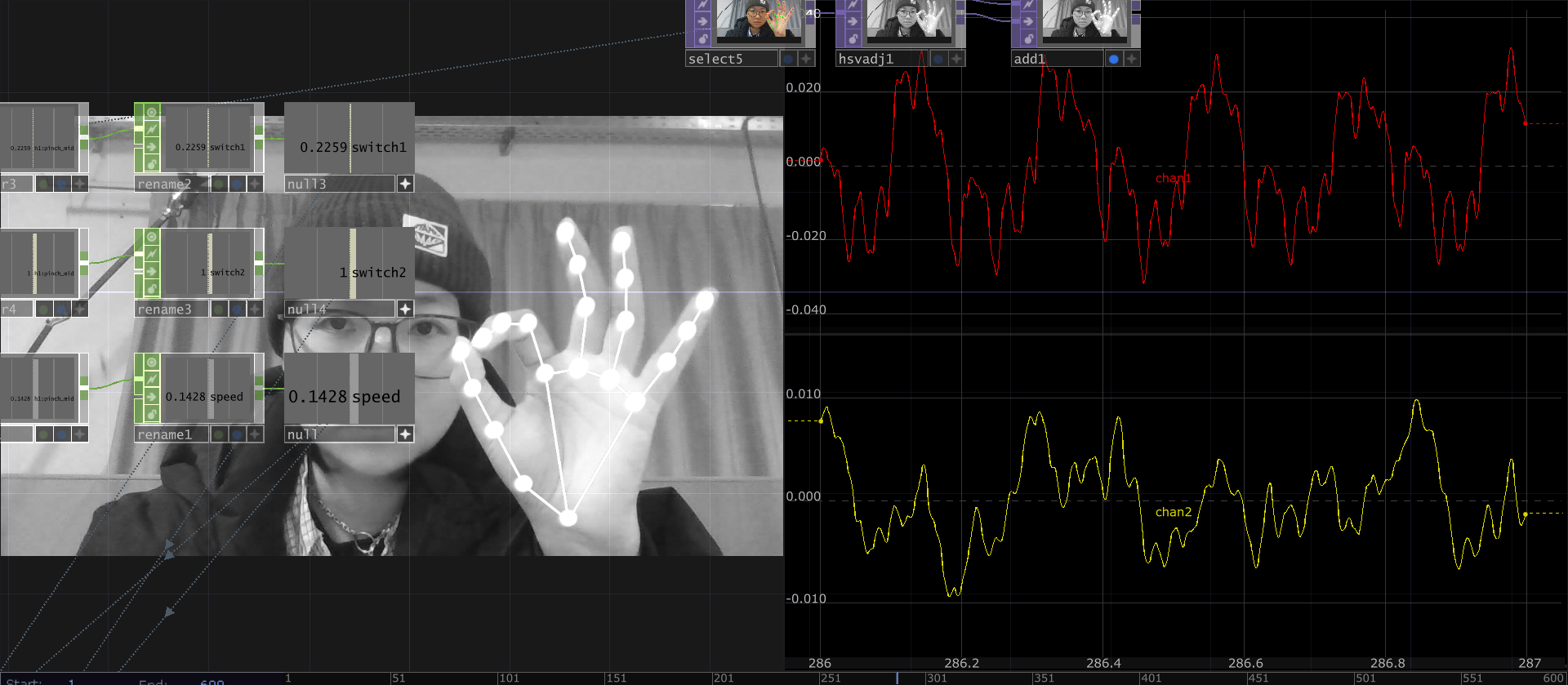

- Hand gestures are mapped dynamically to music styles and pitches during performance, enabling rich, real-time expression.

- Horizontal gestures switch between musical styles (e.g., orchestral to guitar), while vertical gestures control pitch and tempo.

- Closing the hand controls volume.
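The list above leaves the exact mapping open, so here is one hypothetical reading of it as code: horizontal position crossfades between the two generated tracks, hand height sets both a pitch transposition and a tempo multiplier, and hand openness (0 = fist, 1 = open) sets the volume. The ranges (±12 semitones, 0.75× to 1.25× tempo), the names `map_gesture` and `MusicParams`, and treating "closed hand" as a continuous openness value are all assumptions rather than details from the project.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class MusicParams:
    style_mix: float       # 0.0 = first track (e.g. orchestral), 1.0 = second (e.g. guitar)
    pitch_semitones: int   # transposition applied to playback
    tempo_scale: float     # playback-rate multiplier
    volume: float          # 0.0 = silent, 1.0 = full


def clamp(v: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, v))


def map_gesture(x: float, y: float, openness: float,
                max_semitones: int = 12,
                tempo_range: Tuple[float, float] = (0.75, 1.25)) -> MusicParams:
    """Hypothetical mapping from normalized hand state (all 0..1) to playback parameters.

    x, y use image coordinates (origin top-left), so y is flipped to make
    raising the hand increase pitch and tempo.
    """
    x, y, openness = clamp(x), clamp(y), clamp(openness)
    height = 1.0 - y                      # 0 = bottom of frame, 1 = top
    lo, hi = tempo_range
    return MusicParams(
        style_mix=x,                                              # horizontal -> style
        pitch_semitones=round((2 * height - 1) * max_semitones),  # vertical -> pitch
        tempo_scale=lo + height * (hi - lo),                      # vertical -> tempo
        volume=openness,                                          # closing the hand -> quieter
    )


print(map_gesture(0.5, 0.5, 1.0))
# MusicParams(style_mix=0.5, pitch_semitones=0, tempo_scale=1.0, volume=1.0)
```

Driving pitch and tempo from the same axis mirrors the wording above; a performer who wants them independent could move tempo to a second hand instead.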

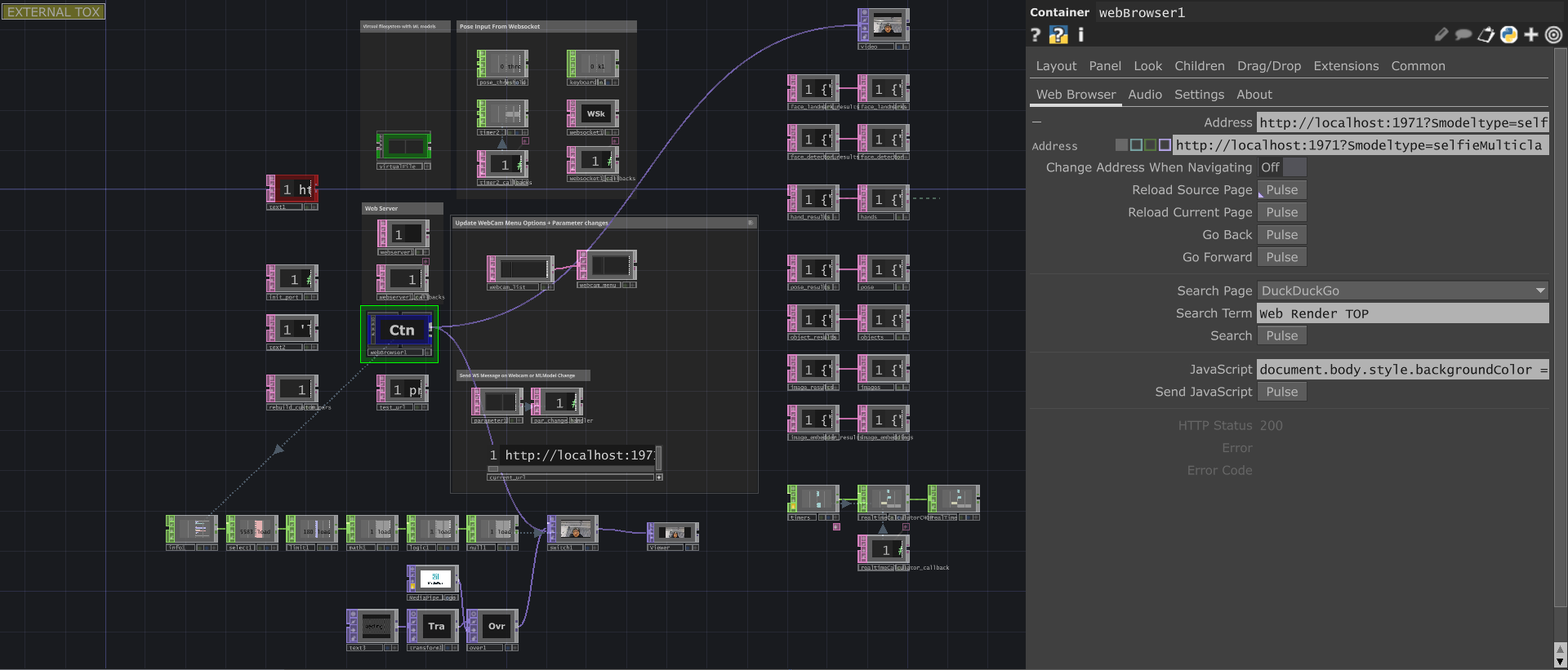

AI-Functionality (based on Touchdesigner): A camera system uses Mediapipe for skeletal hand tracking and OpenCV for spatial analysis of hand gestures. Music playback is adjusted in real time as the gestures move, keeping the experience immersive and responsive.

Gesture Recognition:

Mediapipe provides accurate hand tracking, while OpenCV processes the spatial data for precise control. Together they let the music respond instantly to gesture changes.

Mediapipe, developed by Google, is an open-source framework for real-time computer vision and machine learning tasks. Its hand-tracking model uses deep learning, specifically convolutional neural networks (CNNs), to locate 21 key points on each hand, including the wrist, knuckles, and fingertips, which enables accurate gesture recognition in real time. Mediapipe was chosen for its low-latency, high-accuracy hand tracking, which is crucial for keeping the music synchronized with hand gestures in this project.
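Mediapipe's hand model reports 21 landmarks per hand, indexed 0 (wrist) to 20 (pinky tip), with x and y normalized to the image width and height. The sketch below assumes those landmarks have already been copied into a list of `(x, y)` tuples and derives a hand position plus an "openness" score that a closed-hand volume control could use. The fingertip-reach-to-palm-length heuristic, the function name `hand_features`, and the thresholds `closed_ratio` and `open_ratio` are hypothetical, not taken from the project.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # normalized (x, y) for one landmark

# Mediapipe hand-landmark indices (0 = wrist ... 20 = pinky tip).
WRIST = 0
MIDDLE_MCP = 9                 # knuckle at the base of the middle finger
FINGERTIPS = (8, 12, 16, 20)   # index, middle, ring, pinky tips (thumb left out)


def hand_features(landmarks: Sequence[Point],
                  closed_ratio: float = 1.0,
                  open_ratio: float = 1.9) -> Tuple[Point, float]:
    """Return (hand position, openness in 0..1) from 21 hand landmarks.

    Openness compares how far the fingertips reach from the wrist with the
    palm length (wrist -> middle knuckle), which makes it largely independent
    of how far the hand is from the camera. Both thresholds are guesses to tune.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    palm = math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])
    if palm == 0:
        raise ValueError("degenerate hand: zero palm length")
    reach = sum(math.dist(landmarks[WRIST], landmarks[i]) for i in FINGERTIPS) / len(FINGERTIPS)
    openness = (reach / palm - closed_ratio) / (open_ratio - closed_ratio)
    return landmarks[MIDDLE_MCP], max(0.0, min(1.0, openness))
```

Because normalized x and y are scaled by different image dimensions, distances are slightly skewed on non-square frames; multiplying x by the aspect ratio first would correct for that.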

OpenCV is an open-source computer vision library for image processing and analysis. In this project, OpenCV performs spatial analysis of the hand gestures, determining their position, angle, and distance in 3D space. This data directly drives music playback parameters such as pitch, tempo, and style. By processing the camera images and refining the gesture data, OpenCV improves both the accuracy of gesture recognition and its synchronization with the music.
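The exact OpenCV operations are not listed, so as an illustration of the angle and distance analysis described above, the sketch below uses plain `math` in place of OpenCV calls so that it runs anywhere. It computes the in-plane hand angle from the wrist-to-middle-knuckle vector (Mediapipe landmarks 0 and 9) and estimates camera distance with the pinhole relation Z = f · L / l. The 9 cm palm length and the focal length in pixels (which OpenCV's `cv2.calibrateCamera` can estimate) are assumptions, and the function names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # normalized (x, y); y grows downward as in image coordinates


def _palm_vector_px(wrist: Point, middle_mcp: Point, width: int, height: int) -> Tuple[float, float]:
    """Wrist -> middle-finger knuckle vector, converted from normalized units to pixels."""
    return ((middle_mcp[0] - wrist[0]) * width, (middle_mcp[1] - wrist[1]) * height)


def hand_angle_deg(wrist: Point, middle_mcp: Point, width: int, height: int) -> float:
    """In-plane hand roll: 0 = fingers pointing straight up, positive = tilted clockwise."""
    dx, dy = _palm_vector_px(wrist, middle_mcp, width, height)
    return math.degrees(math.atan2(dx, -dy))  # -dy so that "up" on screen counts as positive


def estimate_distance_m(wrist: Point, middle_mcp: Point, width: int, height: int,
                        focal_px: float, palm_length_m: float = 0.09) -> float:
    """Pinhole-camera estimate Z = f * L / l, with L an assumed real palm length in metres."""
    dx, dy = _palm_vector_px(wrist, middle_mcp, width, height)
    palm_px = math.hypot(dx, dy)
    if palm_px == 0:
        raise ValueError("palm length is zero in pixels")
    return focal_px * palm_length_m / palm_px
```

The distance estimate assumes the palm faces the camera; tilting the hand toward or away from the lens shortens its apparent length and makes the hand seem farther away than it is.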

3. Visual Feedback Integration (🚧 under construction)

A visual interface displays real-time feedback on gesture recognition, showing the hand’s position on the control axis. Users can immediately see how their movements affect music parameters, allowing them to practice and refine their gestures.

AI-Functionality (based on Touchdesigner): GPU-accelerated rendering engines create dynamic visuals that follow musical changes.

Visual elements react to pitch, tempo, and style adjustments, enriching the performance atmosphere.
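Since this section is still under construction and its Touchdesigner network is not shown, the function below is only a hedged illustration of how visual elements could react to pitch, tempo, and style: it maps the current musical state to a color and a beat-synced pulse scale, values that could then drive rendering parameters. Every mapping choice here (hue per pitch class, saturation per style, a sawtooth pulse per beat) and the name `visual_params` are hypothetical.

```python
import colorsys
from typing import Dict, Tuple


def visual_params(pitch_semitones: int, bpm: float, style_mix: float,
                  t: float) -> Dict[str, object]:
    """Map the current musical state to hypothetical visual controls at time t (seconds)."""
    # Hue follows pitch class, so each semitone gets its own color and the wheel
    # repeats every octave (Python's % keeps negative offsets in 0..11).
    hue = (pitch_semitones % 12) / 12.0
    # Saturation blends between a muted look (style 0) and a vivid look (style 1).
    saturation = 0.4 + 0.6 * max(0.0, min(1.0, style_mix))
    rgb: Tuple[float, float, float] = colorsys.hsv_to_rgb(hue, saturation, 1.0)
    # Pulse on every beat: scale jumps to 1.2 at each beat onset and decays
    # linearly (sawtooth) toward 1.0 just before the next beat.
    beat_phase = (t * bpm / 60.0) % 1.0
    scale = 1.0 + 0.2 * (1.0 - beat_phase)
    return {"rgb": rgb, "hue": hue, "scale": scale}
```

Evaluating this once per rendered frame with the current playback time keeps the pulse locked to the tempo, while pitch and style changes show up immediately as color shifts.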
